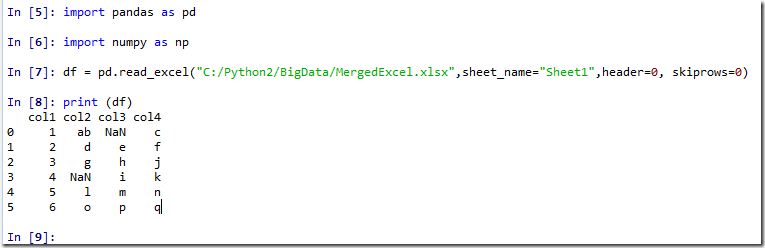

import pandas as pd

import numpy as np  # not used below

df = pd.read_excel("C:/Python2/BigData/MergedExcel.xlsx", sheet_name="Sheet1", header=0, skiprows=0)  # header=0: first row holds the column names

print(df)
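When a block of cells is merged vertically, Excel stores the value only in the top-left cell of the merge. `read_excel` therefore returns it in the first row of the block and NaN in the rows below. If every row should carry the value, forward-filling that column is a common remedy. The sketch below is a minimal example that assumes a hypothetical sheet whose `Region` column was merged over pairs of rows. The frame is built by hand so the example runs without the workbook.

```python
import numpy as np
import pandas as pd

# Hypothetical frame shaped like read_excel's result for a sheet where
# "Region" was merged over rows 0-1 and rows 2-3: only the first row of each
# merged block carries the value, the remaining rows come back as NaN.
df = pd.DataFrame(
    {
        "Region": ["North", np.nan, "South", np.nan],
        "Sales": [10, 20, 30, 40],
    }
)

# Propagate the last valid value downward to fill the rest of each merged block.
df["Region"] = df["Region"].ffill()
print(df)
```

Forward fill is only correct when a NaN in that column really means "same as the row above". A cell that is genuinely empty in the sheet would be overwritten as well.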

Tuesday, February 27, 2018

How merged cells in Excel are handled by a pandas DataFrame

Monday, February 26, 2018

Hive Beeline default configuration for CDH 5.14

[donghua@cdh-vm scripts]$ beeline -u jdbc:hive2://cdh-vm.dbaglobe.com:10000/test -n donghua << EOD |grep -v hive-exec-core.jar

> ! set headerinterval 10000

> ! set outputformat csv2

> set

> EOD
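The heredoc feeds three commands to Beeline. `!set headerinterval 10000` sets the interval, in rows, at which column headers are repeated. `!set outputformat csv2` switches results to plain comma-separated values. A bare `set` prints every session property as `key=value`, with environment variables prefixed `env:` and JVM system properties prefixed `system:`. To diff such a dump against another cluster, it helps to load it into dictionaries. The following is a minimal Python sketch that assumes the captured output has one property per line. `parse_set_output` is a hypothetical helper and is not part of Hive or Beeline.

```python
def parse_set_output(lines):
    """Split Beeline `set` output into (conf, env, system) dictionaries.

    Hypothetical helper: expects one `key=value` pair per line, as printed by
    `set`. Lines whose key part contains whitespace (connection banners, JVM
    warnings, echoed prompts) are skipped.
    """
    conf, env, system = {}, {}, {}
    for raw in lines:
        line = raw.strip()
        if "=" not in line:
            continue
        # Split on the first '=' only: values such as JDBC URLs contain '=' too.
        key, value = line.split("=", 1)
        if not key or any(ch.isspace() for ch in key):
            continue
        if key.startswith("env:"):
            env[key[len("env:"):]] = value
        elif key.startswith("system:"):
            system[key[len("system:"):]] = value
        else:
            conf[key] = value
    return conf, env, system


# A few lines copied from the dump, including noise that should be ignored.
sample = [
    "Connected to: Apache Hive (version 1.1.0-cdh5.14.0)",
    "Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0",
    "hive.execution.engine=mr",
    "hive.auto.convert.join.noconditionaltask.size=20971520",
    "javax.jdo.option.ConnectionURL=jdbc:derby:;databaseName=metastore_db;create=true",
    "env:HIVE_LOG_DIR=/var/log/hive",
    "system:file.encoding=UTF-8",
]

conf, env, system = parse_set_output(sample)
print(conf["hive.execution.engine"])  # mr
# Byte-valued settings read more easily in MiB: 20971520 bytes = 20 MiB.
print(int(conf["hive.auto.convert.join.noconditionaltask.size"]) / 2**20)  # 20.0
```

Two parsed dumps can then be compared with a set difference on their `conf.items()` views to list the properties one cluster overrides.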

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

scan complete in 1ms

Connecting to jdbc:hive2://cdh-vm.dbaglobe.com:10000/test

Connected to: Apache Hive (version 1.1.0-cdh5.14.0)

Driver: Hive JDBC (version 1.1.0-cdh5.14.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 1.1.0-cdh5.14.0 by Apache Hive

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/tes> ! set headerinterval 10000

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/tes> ! set outputformat csv2

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/tes> set

. . . . . . . . . . . . . . . . . . . . . . .> set

_hive.hdfs.session.path=/tmp/hive/donghua/d4b067f0-b697-48ff-8223-3f4b527f090c

_hive.local.session.path=/tmp/hive/d4b067f0-b697-48ff-8223-3f4b527f090c

_hive.tmp_table_space=/tmp/hive/donghua/d4b067f0-b697-48ff-8223-3f4b527f090c/_tmp_space.db

datanucleus.autoCreateSchema=true

datanucleus.autoStartMechanismMode=checked

datanucleus.cache.level2=false

datanucleus.cache.level2.type=none

datanucleus.connectionPoolingType=BONECP

datanucleus.fixedDatastore=false

datanucleus.identifierFactory=datanucleus1

datanucleus.plugin.pluginRegistryBundleCheck=LOG

datanucleus.rdbms.useLegacyNativeValueStrategy=true

datanucleus.storeManagerType=rdbms

datanucleus.transactionIsolation=read-committed

datanucleus.validateColumns=false

datanucleus.validateConstraints=false

datanucleus.validateTables=false

fs.har.impl=org.apache.hadoop.hive.shims.HiveHarFileSystem

fs.scheme.class=dfs

hadoop.bin.path=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/bin/hadoop

hive.analyze.stmt.collect.partlevel.stats=true

hive.archive.enabled=false

hive.auto.convert.join=true

hive.auto.convert.join.noconditionaltask=true

hive.auto.convert.join.noconditionaltask.size=20971520

hive.auto.convert.join.use.nonstaged=false

hive.auto.convert.sortmerge.join=false

hive.auto.convert.sortmerge.join.bigtable.selection.policy=org.apache.hadoop.hive.ql.optimizer.AvgPartitionSizeBasedBigTableSelectorForAutoSMJ

hive.auto.convert.sortmerge.join.to.mapjoin=false

hive.auto.progress.timeout=0s

hive.autogen.columnalias.prefix.includefuncname=false

hive.autogen.columnalias.prefix.label=_c

hive.binary.record.max.length=1000

hive.blobstore.optimizations.enabled=true

hive.blobstore.supported.schemes=s3,s3a,s3n

hive.blobstore.use.blobstore.as.scratchdir=false

hive.cache.expr.evaluation=true

hive.cbo.enable=false

hive.cli.errors.ignore=false

hive.cli.pretty.output.num.cols=-1

hive.cli.print.current.db=false

hive.cli.print.header=false

hive.cli.prompt=hive

hive.cluster.delegation.token.store.class=org.apache.hadoop.hive.thrift.MemoryTokenStore

hive.cluster.delegation.token.store.zookeeper.znode=/hivedelegation

hive.compactor.abortedtxn.threshold=1000

hive.compactor.check.interval=300s

hive.compactor.cleaner.run.interval=5000ms

hive.compactor.delta.num.threshold=10

hive.compactor.delta.pct.threshold=0.1

hive.compactor.initiator.on=false

hive.compactor.worker.threads=0

hive.compactor.worker.timeout=86400s

hive.compat=0.12

hive.compute.query.using.stats=false

hive.compute.splits.in.am=true

hive.conf.hidden.list=javax.jdo.option.ConnectionPassword,hive.server2.keystore.password,fs.s3.awsAccessKeyId,fs.s3.awsSecretAccessKey,fs.s3n.awsAccessKeyId,fs.s3n.awsSecretAccessKey,fs.s3a.access.key,fs.s3a.secret.key,fs.s3a.proxy.password,dfs.adls.oauth2.credential,fs.adl.oauth2.credential

hive.conf.restricted.list=hive.security.authenticator.manager,hive.security.authorization.manager,hive.users.in.admin.role,hadoop.bin.path,yarn.bin.path,_hive.local.session.path,_hive.hdfs.session.path,_hive.tmp_table_space,_hive.local.session.path,_hive.hdfs.session.path,_hive.tmp_table_space

hive.conf.validation=true

hive.convert.join.bucket.mapjoin.tez=false

hive.counters.group.name=HIVE

hive.debug.localtask=false

hive.decode.partition.name=false

hive.default.fileformat=TextFile

hive.default.rcfile.serde=org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe

hive.default.serde=org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

hive.display.partition.cols.separately=true

hive.downloaded.resources.dir=/tmp/${hive.session.id}_resources

hive.enforce.bucketing=false

hive.enforce.bucketmapjoin=false

hive.enforce.sorting=false

hive.enforce.sortmergebucketmapjoin=false

hive.entity.capture.input.URI=true

hive.entity.capture.transform=false

hive.entity.separator=@

hive.error.on.empty.partition=false

hive.exec.check.crossproducts=true

hive.exec.compress.intermediate=false

hive.exec.compress.output=false

hive.exec.concatenate.check.index=true

hive.exec.copyfile.maxsize=33554432

hive.exec.counters.pull.interval=1000

hive.exec.default.partition.name=__HIVE_DEFAULT_PARTITION__

hive.exec.drop.ignorenonexistent=true

hive.exec.dynamic.partition=true

hive.exec.dynamic.partition.mode=strict

hive.exec.infer.bucket.sort=false

hive.exec.infer.bucket.sort.num.buckets.power.two=false

hive.exec.input.listing.max.threads=15

hive.exec.job.debug.capture.stacktraces=true

hive.exec.job.debug.timeout=30000

hive.exec.local.scratchdir=/tmp/hive

hive.exec.max.created.files=100000

hive.exec.max.dynamic.partitions=1000

hive.exec.max.dynamic.partitions.pernode=100

hive.exec.mode.local.auto=false

hive.exec.mode.local.auto.input.files.max=4

hive.exec.mode.local.auto.inputbytes.max=134217728

hive.exec.orc.block.padding.tolerance=0.05

hive.exec.orc.compression.strategy=SPEED

hive.exec.orc.default.block.padding=true

hive.exec.orc.default.block.size=268435456

hive.exec.orc.default.buffer.size=262144

hive.exec.orc.default.compress=ZLIB

hive.exec.orc.default.row.index.stride=10000

hive.exec.orc.default.stripe.size=67108864

hive.exec.orc.dictionary.key.size.threshold=0.8

hive.exec.orc.encoding.strategy=SPEED

hive.exec.orc.memory.pool=0.5

hive.exec.orc.skip.corrupt.data=false

hive.exec.orc.zerocopy=false

hive.exec.parallel=false

hive.exec.parallel.thread.number=8

hive.exec.perf.logger=org.apache.hadoop.hive.ql.log.PerfLogger

hive.exec.rcfile.use.explicit.header=true

hive.exec.rcfile.use.sync.cache=true

hive.exec.reducers.bytes.per.reducer=67108864

hive.exec.reducers.max=1099

hive.exec.rowoffset=false

hive.exec.scratchdir=/tmp/hive

hive.exec.script.allow.partial.consumption=false

hive.exec.script.maxerrsize=100000

hive.exec.script.trust=false

hive.exec.show.job.failure.debug.info=true

hive.exec.stagingdir=.hive-staging

hive.exec.submit.local.task.via.child=true

hive.exec.submitviachild=false

hive.exec.tasklog.debug.timeout=20000

hive.execution.engine=mr

hive.exim.strict.repl.tables=true

hive.exim.uri.scheme.whitelist=hdfs,pfile,s3,s3a,adl

hive.explain.dependency.append.tasktype=false

hive.fetch.output.serde=org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

hive.fetch.task.aggr=false

hive.fetch.task.conversion=minimal

hive.fetch.task.conversion.threshold=268435456

hive.file.max.footer=100

hive.fileformat.check=true

hive.groupby.mapaggr.checkinterval=100000

hive.groupby.orderby.position.alias=false

hive.groupby.skewindata=false

hive.hashtable.initialCapacity=100000

hive.hashtable.key.count.adjustment=1.0

hive.hashtable.loadfactor=0.75

hive.hbase.generatehfiles=false

hive.hbase.snapshot.restoredir=/tmp

hive.hbase.wal.enabled=true

hive.heartbeat.interval=1000

hive.hmshandler.force.reload.conf=false

hive.hmshandler.retry.attempts=10

hive.hmshandler.retry.interval=2000ms

hive.hwi.listen.host=0.0.0.0

hive.hwi.listen.port=9999

hive.hwi.war.file=${env:HWI_WAR_FILE}

hive.ignore.mapjoin.hint=true

hive.in.test=false

hive.in.test.remove.logs=true

hive.in.test.short.logs=false

hive.in.tez.test=false

hive.index.compact.binary.search=true

hive.index.compact.file.ignore.hdfs=false

hive.index.compact.query.max.entries=10000000

hive.index.compact.query.max.size=10737418240

hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat

hive.insert.into.external.tables=true

hive.insert.into.multilevel.dirs=false

hive.io.rcfile.column.number.conf=0

hive.io.rcfile.record.buffer.size=4194304

hive.io.rcfile.record.interval=2147483647

hive.io.rcfile.tolerate.corruptions=false

hive.jobname.length=50

hive.join.cache.size=25000

hive.join.emit.interval=1000

hive.lazysimple.extended_boolean_literal=false

hive.limit.optimize.enable=false

hive.limit.optimize.fetch.max=50000

hive.limit.optimize.limit.file=10

hive.limit.pushdown.memory.usage=0.1

hive.limit.query.max.table.partition=-1

hive.limit.row.max.size=100000

hive.load.dynamic.partitions.thread=15

hive.localize.resource.num.wait.attempts=5

hive.localize.resource.wait.interval=5000ms

hive.lock.manager=org.apache.hadoop.hive.ql.lockmgr.zookeeper.ZooKeeperHiveLockManager

hive.lock.mapred.only.operation=false

hive.lock.numretries=100

hive.lock.query.string.max.length=1000000

hive.lock.sleep.between.retries=60s

hive.lockmgr.zookeeper.default.partition.name=__HIVE_DEFAULT_ZOOKEEPER_PARTITION__

hive.log.explain.output=false

hive.map.aggr=true

hive.map.aggr.hash.force.flush.memory.threshold=0.9

hive.map.aggr.hash.min.reduction=0.5

hive.map.aggr.hash.percentmemory=0.5

hive.map.groupby.sorted=false

hive.map.groupby.sorted.testmode=false

hive.mapjoin.bucket.cache.size=100

hive.mapjoin.check.memory.rows=100000

hive.mapjoin.followby.gby.localtask.max.memory.usage=0.55

hive.mapjoin.followby.map.aggr.hash.percentmemory=0.3

hive.mapjoin.localtask.max.memory.usage=0.9

hive.mapjoin.optimized.hashtable=true

hive.mapjoin.optimized.hashtable.wbsize=10485760

hive.mapjoin.smalltable.filesize=25000000

hive.mapper.cannot.span.multiple.partitions=false

hive.mapred.local.mem=0

hive.mapred.mode=nonstrict

hive.mapred.partitioner=org.apache.hadoop.hive.ql.io.DefaultHivePartitioner

hive.mapred.reduce.tasks.speculative.execution=true

hive.mapred.supports.subdirectories=false

hive.merge.mapfiles=true

hive.merge.mapredfiles=false

hive.merge.orcfile.stripe.level=true

hive.merge.rcfile.block.level=true

hive.merge.size.per.task=268435456

hive.merge.smallfiles.avgsize=16777216

hive.merge.sparkfiles=true

hive.merge.tezfiles=false

hive.metadata.move.exported.metadata.to.trash=true

hive.metastore.archive.intermediate.archived=_INTERMEDIATE_ARCHIVED

hive.metastore.archive.intermediate.extracted=_INTERMEDIATE_EXTRACTED

hive.metastore.archive.intermediate.original=_INTERMEDIATE_ORIGINAL

hive.metastore.authorization.storage.checks=false

hive.metastore.batch.retrieve.max=300

hive.metastore.batch.retrieve.table.partition.max=1000

hive.metastore.cache.pinobjtypes=Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order

hive.metastore.client.connect.retry.delay=1s

hive.metastore.client.socket.timeout=300

hive.metastore.connect.retries=3

hive.metastore.direct.sql.batch.size=0

hive.metastore.disallow.incompatible.col.type.changes=false

hive.metastore.dml.events=false

hive.metastore.event.clean.freq=0s

hive.metastore.event.db.listener.timetolive=86400s

hive.metastore.event.expiry.duration=0s

hive.metastore.execute.setugi=true

hive.metastore.expression.proxy=org.apache.hadoop.hive.ql.optimizer.ppr.PartitionExpressionForMetastore

hive.metastore.failure.retries=1

hive.metastore.filter.hook=org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl

hive.metastore.fs.handler.class=org.apache.hadoop.hive.metastore.HiveMetaStoreFsImpl

hive.metastore.fshandler.threads=15

hive.metastore.initial.metadata.count.enabled=true

hive.metastore.integral.jdo.pushdown=false

hive.metastore.kerberos.principal=hive-metastore/_HOST@EXAMPLE.COM

hive.metastore.limit.partition.request=-1

hive.metastore.metrics.enabled=false

hive.metastore.orm.retrieveMapNullsAsEmptyStrings=false

hive.metastore.rawstore.impl=org.apache.hadoop.hive.metastore.ObjectStore

hive.metastore.sasl.enabled=false

hive.metastore.schema.info.class=org.apache.hadoop.hive.metastore.CDHMetaStoreSchemaInfo

hive.metastore.schema.verification=false

hive.metastore.schema.verification.record.version=true

hive.metastore.server.max.message.size=104857600

hive.metastore.server.max.threads=1000

hive.metastore.server.min.threads=200

hive.metastore.server.tcp.keepalive=true

hive.metastore.thrift.compact.protocol.enabled=false

hive.metastore.thrift.framed.transport.enabled=false

hive.metastore.try.direct.sql=true

hive.metastore.try.direct.sql.ddl=true

hive.metastore.uris=thrift://cdh-vm.dbaglobe.com:9083

hive.metastore.use.SSL=false

hive.metastore.warehouse.dir=/user/hive/warehouse

hive.msck.path.validation=throw

hive.msck.repair.batch.size=0

hive.multi.insert.move.tasks.share.dependencies=false

hive.multigroupby.singlereducer=true

hive.mv.files.thread=15

hive.new.job.grouping.set.cardinality=30

hive.optimize.bucketingsorting=true

hive.optimize.bucketmapjoin=false

hive.optimize.bucketmapjoin.sortedmerge=false

hive.optimize.constant.propagation=true

hive.optimize.correlation=false

hive.optimize.distinct.rewrite=true

hive.optimize.groupby=true

hive.optimize.index.autoupdate=false

hive.optimize.index.filter=true

hive.optimize.index.filter.compact.maxsize=-1

hive.optimize.index.filter.compact.minsize=5368709120

hive.optimize.index.groupby=false

hive.optimize.listbucketing=false

hive.optimize.metadataonly=true

hive.optimize.null.scan=true

hive.optimize.ppd=true

hive.optimize.ppd.storage=true

hive.optimize.reducededuplication=true

hive.optimize.reducededuplication.min.reducer=4

hive.optimize.remove.identity.project=true

hive.optimize.sampling.orderby=false

hive.optimize.sampling.orderby.number=1000

hive.optimize.sampling.orderby.percent=0.1

hive.optimize.skewjoin=false

hive.optimize.skewjoin.compiletime=false

hive.optimize.sort.dynamic.partition=false

hive.optimize.union.remove=false

hive.orc.cache.stripe.details.size=10000

hive.orc.compute.splits.num.threads=10

hive.orc.row.index.stride.dictionary.check=true

hive.orc.splits.include.file.footer=false

hive.outerjoin.supports.filters=true

hive.parquet.timestamp.skip.conversion=true

hive.plan.serialization.format=kryo

hive.ppd.recognizetransivity=true

hive.ppd.remove.duplicatefilters=true

hive.prewarm.enabled=false

hive.prewarm.numcontainers=10

hive.prewarm.spark.timeout=5000ms

hive.query.result.fileformat=TextFile

hive.query.timeout.seconds=0s

hive.querylog.enable.plan.progress=true

hive.querylog.location=/tmp/hive

hive.querylog.plan.progress.interval=60000ms

hive.reorder.nway.joins=true

hive.resultset.use.unique.column.names=true

hive.rework.mapredwork=false

hive.rpc.query.plan=false

hive.sample.seednumber=0

hive.scratch.dir.permission=700

hive.scratchdir.lock=false

hive.script.auto.progress=false

hive.script.operator.env.blacklist=hive.txn.valid.txns,hive.script.operator.env.blacklist

hive.script.operator.id.env.var=HIVE_SCRIPT_OPERATOR_ID

hive.script.operator.truncate.env=false

hive.script.recordreader=org.apache.hadoop.hive.ql.exec.TextRecordReader

hive.script.recordwriter=org.apache.hadoop.hive.ql.exec.TextRecordWriter

hive.script.serde=org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

hive.security.authenticator.manager=org.apache.hadoop.hive.ql.security.HadoopDefaultAuthenticator

hive.security.authorization.enabled=false

hive.security.authorization.manager=org.apache.hadoop.hive.ql.security.authorization.DefaultHiveAuthorizationProvider

hive.security.authorization.sqlstd.confwhitelist=hive\.auto\..*|hive\.cbo\..*|hive\.convert\..*|hive\.exec\.dynamic\.partition.*|hive\.exec\..*\.dynamic\.partitions\..*|hive\.exec\.compress\..*|hive\.exec\.infer\..*|hive\.exec\.mode.local\..*|hive\.exec\.orc\..*|hive\.fetch.task\..*|hive\.hbase\..*|hive\.index\..*|hive\.index\..*|hive\.intermediate\..*|hive\.join\..*|hive\.limit\..*|hive\.mapjoin\..*|hive\.merge\..*|hive\.optimize\..*|hive\.orc\..*|hive\.outerjoin\..*|hive\.ppd\..*|hive\.prewarm\..*|hive\.skewjoin\..*|hive\.smbjoin\..*|hive\.stats\..*|hive\.tez\..*|hive\.vectorized\..*|mapred\.map\..*|mapred\.reduce\..*|mapred\.output\.compression\.codec|mapreduce\.job\.reduce\.slowstart\.completedmaps|mapreduce\.job\.queuename|mapreduce\.input\.fileinputformat\.split\.minsize|mapreduce\.map\..*|mapreduce\.reduce\..*|tez\.am\..*|tez\.task\..*|tez\.runtime\..*|hive\.exec\.reducers\.bytes\.per\.reducer|hive\.client\.stats\.counters|hive\.exec\.default\.partition\.name|hive\.exec\.drop\.ignorenonexistent|hive\.counters\.group\.name|hive\.enforce\.bucketing|hive\.enforce\.bucketmapjoin|hive\.enforce\.sorting|hive\.enforce\.sortmergebucketmapjoin|hive\.cache\.expr\.evaluation|hive\.groupby\.skewindata|hive\.hashtable\.loadfactor|hive\.hashtable\.initialCapacity|hive\.ignore\.mapjoin\.hint|hive\.limit\.row\.max\.size|hive\.mapred\.mode|hive\.map\.aggr|hive\.compute\.query\.using\.stats|hive\.exec\.rowoffset|hive\.variable\.substitute|hive\.variable\.substitute\.depth|hive\.autogen\.columnalias\.prefix\.includefuncname|hive\.autogen\.columnalias\.prefix\.label|hive\.exec\.check\.crossproducts|hive\.compat|hive\.exec\.concatenate\.check\.index|hive\.display\.partition\.cols\.separately|hive\.error\.on\.empty\.partition|hive\.execution\.engine|hive\.exim\.uri\.scheme\.whitelist|hive\.file\.max\.footer|hive\.mapred\.supports\.subdirectories|hive\.insert\.into\.multilevel\.dirs|hive\.localize\.resource\.num\.wait\.attempts|hive\.multi\.insert\.move\.tasks\.share\.dependencies|hive\.support\.quoted\.identifiers|hive\.resultset\.use\.unique\.column\.names|hive\.analyze\.stmt\.collect\.partlevel\.stats|hive\.exec\.job\.debug\.capture\.stacktraces|hive\.exec\.job\.debug\.timeout|hive\.exec\.max\.created\.files|hive\.exec\.reducers\.max|hive\.output\.file\.extension|hive\.exec\.show\.job\.failure\.debug\.info|hive\.exec\.tasklog\.debug\.timeout|hive\.query\.id

hive.security.authorization.task.factory=org.apache.hadoop.hive.ql.parse.authorization.RestrictedHiveAuthorizationTaskFactoryImpl

hive.security.command.whitelist=set,reset,dfs,add,list,delete,reload,compile

hive.security.metastore.authenticator.manager=org.apache.hadoop.hive.ql.security.HadoopDefaultMetastoreAuthenticator

hive.security.metastore.authorization.auth.reads=true

hive.security.metastore.authorization.manager=org.apache.hadoop.hive.ql.security.authorization.DefaultHiveMetastoreAuthorizationProvider

hive.serdes.using.metastore.for.schema=org.apache.hadoop.hive.ql.io.orc.OrcSerde,org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe,org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe,org.apache.hadoop.hive.serde2.dynamic_type.DynamicSerDe,org.apache.hadoop.hive.serde2.MetadataTypedColumnsetSerDe,org.apache.hadoop.hive.serde2.columnar.LazyBinaryColumnarSerDe,org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe,org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe

hive.server.read.socket.timeout=10s

hive.server.tcp.keepalive=true

hive.server2.allow.user.substitution=true

hive.server2.async.exec.async.compile=false

hive.server2.async.exec.keepalive.time=10s

hive.server2.async.exec.shutdown.timeout=10s

hive.server2.async.exec.threads=100

hive.server2.async.exec.wait.queue.size=100

hive.server2.authentication=NONE

hive.server2.authentication.ldap.groupClassKey=groupOfNames

hive.server2.authentication.ldap.groupMembershipKey=member

hive.server2.authentication.ldap.guidKey=uid

hive.server2.clear.dangling.scratchdir=false

hive.server2.clear.dangling.scratchdir.interval=1800s

hive.server2.compile.lock.timeout=0s

hive.server2.enable.doAs=true

hive.server2.global.init.file.location=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2

hive.server2.idle.operation.timeout=21600000

hive.server2.idle.session.check.operation=false

hive.server2.idle.session.timeout=43200000

hive.server2.idle.session.timeout_check_operation=true

hive.server2.logging.operation.enabled=true

hive.server2.logging.operation.level=EXECUTION

hive.server2.logging.operation.log.location=/var/log/hive/operation_logs

hive.server2.long.polling.timeout=5000ms

hive.server2.map.fair.scheduler.queue=true

hive.server2.max.start.attempts=30

hive.server2.metrics.enabled=true

hive.server2.session.check.interval=900000

hive.server2.sleep.interval.between.start.attempts=60s

hive.server2.support.dynamic.service.discovery=false

hive.server2.table.type.mapping=CLASSIC

hive.server2.tez.initialize.default.sessions=false

hive.server2.tez.sessions.per.default.queue=1

hive.server2.thrift.bind.host=cdh-vm.dbaglobe.com

hive.server2.thrift.exponential.backoff.slot.length=100ms

hive.server2.thrift.http.max.idle.time=1800s

hive.server2.thrift.http.max.worker.threads=500

hive.server2.thrift.http.min.worker.threads=5

hive.server2.thrift.http.path=cliservice

hive.server2.thrift.http.port=10001

hive.server2.thrift.http.worker.keepalive.time=60s

hive.server2.thrift.login.timeout=20s

hive.server2.thrift.max.message.size=104857600

hive.server2.thrift.max.worker.threads=100

hive.server2.thrift.min.worker.threads=5

hive.server2.thrift.port=10000

hive.server2.thrift.sasl.qop=auth

hive.server2.thrift.worker.keepalive.time=60s

hive.server2.transport.mode=binary

hive.server2.use.SSL=false

hive.server2.webui.host=0.0.0.0

hive.server2.webui.max.historic.queries=25

hive.server2.webui.max.threads=50

hive.server2.webui.port=10002

hive.server2.webui.spnego.principal=HTTP/_HOST@EXAMPLE.COM

hive.server2.webui.use.spnego=false

hive.server2.webui.use.ssl=false

hive.server2.zookeeper.namespace=hiveserver2

hive.service.metrics.class=org.apache.hadoop.hive.common.metrics.metrics2.CodahaleMetrics

hive.service.metrics.file.frequency=30000

hive.service.metrics.file.location=/var/log/hive/metrics-hiveserver2/metrics.log

hive.service.metrics.reporter=JSON_FILE, JMX

hive.session.history.enabled=false

hive.session.id=d4b067f0-b697-48ff-8223-3f4b527f090c

hive.session.silent=false

hive.skewjoin.key=100000

hive.skewjoin.mapjoin.map.tasks=10000

hive.skewjoin.mapjoin.min.split=33554432

hive.smbjoin.cache.rows=10000

hive.spark.client.connect.timeout=1000ms

hive.spark.client.future.timeout=60s

hive.spark.client.rpc.max.size=52428800

hive.spark.client.rpc.sasl.mechanisms=DIGEST-MD5

hive.spark.client.rpc.threads=8

hive.spark.client.secret.bits=256

hive.spark.client.server.connect.timeout=90000ms

hive.spark.dynamic.partition.pruning=false

hive.spark.dynamic.partition.pruning.map.join.only=false

hive.spark.dynamic.partition.pruning.max.data.size=104857600

hive.spark.job.monitor.timeout=60s

hive.ssl.protocol.blacklist=SSLv2,SSLv3

hive.stageid.rearrange=none

hive.start.cleanup.scratchdir=false

hive.stats.atomic=false

hive.stats.autogather=true

hive.stats.collect.rawdatasize=true

hive.stats.collect.scancols=true

hive.stats.collect.tablekeys=false

hive.stats.dbclass=fs

hive.stats.dbconnectionstring=jdbc:derby:;databaseName=TempStatsStore;create=true

hive.stats.deserialization.factor=1.0

hive.stats.fetch.column.stats=true

hive.stats.fetch.partition.stats=true

hive.stats.gather.num.threads=10

hive.stats.jdbc.timeout=30s

hive.stats.jdbcdriver=org.apache.derby.jdbc.EmbeddedDriver

hive.stats.join.factor=1.1

hive.stats.key.prefix.max.length=150

hive.stats.key.prefix.reserve.length=24

hive.stats.list.num.entries=10

hive.stats.map.num.entries=10

hive.stats.max.variable.length=100

hive.stats.ndv.error=20.0

hive.stats.reliable=false

hive.stats.retries.max=0

hive.stats.retries.wait=3000ms

hive.support.concurrency=true

hive.support.quoted.identifiers=column

hive.test.authz.sstd.hs2.mode=false

hive.test.mode=false

hive.test.mode.prefix=test_

hive.test.mode.samplefreq=32

hive.tez.auto.reducer.parallelism=false

hive.tez.container.size=-1

hive.tez.cpu.vcores=-1

hive.tez.dynamic.partition.pruning=true

hive.tez.dynamic.partition.pruning.max.data.size=104857600

hive.tez.dynamic.partition.pruning.max.event.size=1048576

hive.tez.exec.inplace.progress=true

hive.tez.exec.print.summary=false

hive.tez.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat

hive.tez.log.level=INFO

hive.tez.max.partition.factor=2.0

hive.tez.min.partition.factor=0.25

hive.tez.smb.number.waves=0.5

hive.transform.escape.input=false

hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DummyTxnManager

hive.txn.max.open.batch=1000

hive.txn.timeout=300s

hive.typecheck.on.insert=true

hive.udtf.auto.progress=false

hive.unlock.numretries=10

hive.user.install.directory=hdfs:///user/

hive.variable.substitute=true

hive.variable.substitute.depth=40

hive.vectorized.execution.enabled=true

hive.vectorized.execution.reduce.enabled=false

hive.vectorized.execution.reduce.groupby.enabled=true

hive.vectorized.groupby.checkinterval=4096

hive.vectorized.groupby.flush.percent=0.1

hive.vectorized.groupby.maxentries=1000000

hive.warehouse.subdir.inherit.perms=true

hive.zookeeper.clean.extra.nodes=false

hive.zookeeper.client.port=2181

hive.zookeeper.connection.basesleeptime=1000ms

hive.zookeeper.connection.max.retries=3

hive.zookeeper.namespace=hive_zookeeper_namespace_hive

hive.zookeeper.quorum=cdh-vm.dbaglobe.com

hive.zookeeper.session.timeout=1200000ms

javax.jdo.PersistenceManagerFactoryClass=org.datanucleus.api.jdo.JDOPersistenceManagerFactory

javax.jdo.option.ConnectionDriverName=org.apache.derby.jdbc.EmbeddedDriver

javax.jdo.option.ConnectionURL=jdbc:derby:;databaseName=metastore_db;create=true

javax.jdo.option.ConnectionUserName=APP

javax.jdo.option.DetachAllOnCommit=true

javax.jdo.option.Multithreaded=true

javax.jdo.option.NonTransactionalRead=true

mapreduce.input.fileinputformat.input.dir.recursive=false

mapreduce.input.fileinputformat.split.maxsize=256000000

mapreduce.input.fileinputformat.split.minsize=1

mapreduce.input.fileinputformat.split.minsize.per.node=1

mapreduce.input.fileinputformat.split.minsize.per.rack=1

mapreduce.job.committer.setup.cleanup.needed=false

mapreduce.job.committer.task.cleanup.needed=false

mapreduce.job.reduces=-1

mapreduce.reduce.speculative=true

output.formatter=org.apache.hadoop.hive.ql.exec.FetchFormatter$ThriftFormatter

output.protocol=6

parquet.memory.pool.ratio=0.5

rpc.engine.org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB=org.apache.hadoop.ipc.ProtobufRpcEngine

silent=off

spark.driver.memory=800000000

spark.dynamicAllocation.enabled=true

spark.dynamicAllocation.initialExecutors=1

spark.dynamicAllocation.maxExecutors=2147483647

spark.dynamicAllocation.minExecutors=1

spark.executor.cores=4

spark.executor.memory=1500000000

spark.master=yarn-cluster

spark.shuffle.service.enabled=true

spark.yarn.driver.memoryOverhead=102

spark.yarn.executor.memoryOverhead=614

startcode=1519649735704

stream.stderr.reporter.enabled=true

stream.stderr.reporter.prefix=reporter:

yarn.bin.path=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/bin/yarn

env:CDH_AVRO_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/avro

env:CDH_CRUNCH_HOME=/usr/lib/crunch

env:CDH_FLUME_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/flume-ng

env:CDH_HADOOP_BIN=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/bin/hadoop

env:CDH_HADOOP_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop

env:CDH_HBASE_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hbase

env:CDH_HBASE_INDEXER_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hbase-solr

env:CDH_HCAT_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hive-hcatalog

env:CDH_HDFS_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-hdfs

env:CDH_HIVE_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hive

env:CDH_HTTPFS_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-httpfs

env:CDH_HUE_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hue

env:CDH_HUE_PLUGINS_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop

env:CDH_IMPALA_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/impala

env:CDH_KAFKA_HOME=/usr/lib/kafka

env:CDH_KMS_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-kms

env:CDH_KUDU_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/kudu

env:CDH_LLAMA_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/llama

env:CDH_MR1_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-0.20-mapreduce

env:CDH_MR2_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-mapreduce

env:CDH_OOZIE_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/oozie

env:CDH_PARQUET_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/parquet

env:CDH_PIG_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/pig

env:CDH_SENTRY_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/sentry

env:CDH_SOLR_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/solr

env:CDH_SPARK_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/spark

env:CDH_SQOOP2_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/sqoop2

env:CDH_SQOOP_HOME=/usr/lib/sqoop

env:CDH_VERSION=5

env:CDH_YARN_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-yarn

env:CDH_ZOOKEEPER_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/zookeeper

env:CGROUP_GROUP_BLKIO=

env:CGROUP_GROUP_CPU=

env:CGROUP_GROUP_CPUACCT=

env:CGROUP_GROUP_MEMORY=

env:CGROUP_ROOT_BLKIO=/sys/fs/cgroup/blkio

env:CGROUP_ROOT_CPU=/sys/fs/cgroup/cpu,cpuacct

env:CGROUP_ROOT_CPUACCT=/sys/fs/cgroup/cpu,cpuacct

env:CGROUP_ROOT_MEMORY=/sys/fs/cgroup/memory

env:CLOUDERA_MYSQL_CONNECTOR_JAR=/usr/share/java/mysql-connector-java.jar

env:CLOUDERA_ORACLE_CONNECTOR_JAR=/usr/share/java/oracle-connector-java.jar

env:CLOUDERA_POSTGRESQL_JDBC_JAR=/usr/share/cmf/lib/postgresql-9.0-801.jdbc4.jar

env:CMF_CONF_DIR=/etc/cloudera-scm-agent

env:CMF_PACKAGE_DIR=/usr/lib64/cmf/service

env:CM_ADD_TO_CP_DIRS=navigator/cdh57

env:CM_STATUS_CODES=STATUS_NONE HDFS_DFS_DIR_NOT_EMPTY HBASE_TABLE_DISABLED HBASE_TABLE_ENABLED JOBTRACKER_IN_STANDBY_MODE YARN_RM_IN_STANDBY_MODE

env:CONF_DIR=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2

env:HADOOP_CLIENT_OPTS=-Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Xms4294967296 -Xmx4294967296 -XX:MaxPermSize=512M -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/hive_hive-HIVESERVER2-f2ddc1ecbff0faafbcffe8ebebc13cb1_pid9057.hprof -XX:OnOutOfMemoryError=/usr/lib64/cmf/service/common/killparent.sh

env:HADOOP_CONF_DIR=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2/yarn-conf

env:HADOOP_HEAPSIZE=256

env:HADOOP_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop

env:HADOOP_HOME_WARN_SUPPRESS=true

env:HADOOP_MAPRED_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop-mapreduce

env:HADOOP_PREFIX=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop

env:HIVE_AUX_JARS_PATH=

env:HIVE_CONF_DIR=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2

env:HIVE_DEFAULT_XML=/etc/hive/conf.dist/hive-default.xml

env:HIVE_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hive

env:HIVE_LOGFILE=hadoop-cmf-hive-HIVESERVER2-cdh-vm.dbaglobe.com.log.out

env:HIVE_LOG_DIR=/var/log/hive

env:HIVE_METASTORE_DATABASE_TYPE=mysql

env:HIVE_ROOT_LOGGER=ERROR,RFA

env:HOME=/var/lib/hive

env:JAVA_HOME=/usr/java/jdk1.8.0_162

env:JSVC_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/bigtop-utils

env:KEYTRUSTEE_KP_HOME=/usr/share/keytrustee-keyprovider

env:KEYTRUSTEE_SERVER_HOME=/usr/lib/keytrustee-server

env:LANG=en_US.UTF-8

env:LD_LIBRARY_PATH=:/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/lib/native

env:MALLOC_ARENA_MAX=4

env:MGMT_HOME=/usr/share/cmf

env:ORACLE_HOME=/usr/share/oracle/instantclient

env:PARCELS_ROOT=/opt/cloudera/parcels

env:PARCEL_DIRNAMES=CDH-5.14.0-1.cdh5.14.0.p0.24

env:PATH=/sbin:/usr/sbin:/bin:/usr/bin

env:PWD=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2

env:SCM_DEFINES_SCRIPTS=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/meta/cdh_env.sh

env:SEARCH_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/search

env:SENTRY_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/sentry

env:SERVICE_LIST=beeline cleardanglingscratchdir cli help hiveburninclient hiveserver2 hiveserver hwi jar lineage metastore metatool orcfiledump rcfilecat schemaTool version

env:SHELL=/bin/bash

env:SHLVL=2

env:SPARK_CONF_DIR=/etc/spark/conf

env:SPARK_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/spark

env:SPARK_ON_YARN=true

env:SUPERVISOR_ENABLED=1

env:SUPERVISOR_GROUP_NAME=385-hive-HIVESERVER2

env:SUPERVISOR_PROCESS_NAME=385-hive-HIVESERVER2

env:SUPERVISOR_SERVER_URL=unix:///run/cloudera-scm-agent/supervisor/supervisord.sock

env:TOMCAT_HOME=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/bigtop-tomcat

env:WEBHCAT_DEFAULT_XML=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/etc/hive-webhcat/conf.dist/webhcat-default.xml

env:XDG_RUNTIME_DIR=/run/user/0

env:XDG_SESSION_ID=c2

env:YARN_OPTS=-Xmx825955249 -Djava.net.preferIPv4Stack=true

system:awt.toolkit=sun.awt.X11.XToolkit

system:file.encoding=UTF-8

system:file.encoding.pkg=sun.io

system:file.separator=/

system:hadoop.home.dir=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop

system:hadoop.id.str=

system:hadoop.log.dir=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/logs

system:hadoop.log.file=hadoop.log

system:hadoop.policy.file=hadoop-policy.xml

system:hadoop.root.logger=INFO,console

system:hadoop.security.logger=INFO,NullAppender

system:java.awt.graphicsenv=sun.awt.X11GraphicsEnvironment

system:java.awt.printerjob=sun.print.PSPrinterJob

system:java.class.version=52.0

system:java.endorsed.dirs=/usr/java/jdk1.8.0_162/jre/lib/endorsed

system:java.ext.dirs=/usr/java/jdk1.8.0_162/jre/lib/ext:/usr/java/packages/lib/ext

system:java.home=/usr/java/jdk1.8.0_162/jre

system:java.io.tmpdir=/tmp

system:java.library.path=/opt/cloudera/parcels/CDH-5.14.0-1.cdh5.14.0.p0.24/lib/hadoop/lib/native

system:java.net.preferIPv4Stack=true

system:java.runtime.name=Java(TM) SE Runtime Environment

system:java.runtime.version=1.8.0_162-b12

system:java.specification.name=Java Platform API Specification

system:java.specification.vendor=Oracle Corporation

system:java.specification.version=1.8

system:java.vendor=Oracle Corporation

system:java.vendor.url=http://java.oracle.com/

system:java.vendor.url.bug=http://bugreport.sun.com/bugreport/

system:java.version=1.8.0_162

system:java.vm.info=mixed mode

system:java.vm.name=Java HotSpot(TM) 64-Bit Server VM

system:java.vm.specification.name=Java Virtual Machine Specification

system:java.vm.specification.vendor=Oracle Corporation

system:java.vm.specification.version=1.8

system:java.vm.vendor=Oracle Corporation

system:java.vm.version=25.162-b12

system:line.separator=

system:os.arch=amd64

system:os.name=Linux

system:os.version=3.10.0-693.17.1.el7.x86_64

system:path.separator=:

system:sun.arch.data.model=64

system:sun.boot.class.path=/usr/java/jdk1.8.0_162/jre/lib/resources.jar:/usr/java/jdk1.8.0_162/jre/lib/rt.jar:/usr/java/jdk1.8.0_162/jre/lib/sunrsasign.jar:/usr/java/jdk1.8.0_162/jre/lib/jsse.jar:/usr/java/jdk1.8.0_162/jre/lib/jce.jar:/usr/java/jdk1.8.0_162/jre/lib/charsets.jar:/usr/java/jdk1.8.0_162/jre/lib/jfr.jar:/usr/java/jdk1.8.0_162/jre/classes

system:sun.boot.library.path=/usr/java/jdk1.8.0_162/jre/lib/amd64

system:sun.cpu.endian=little

system:sun.cpu.isalist=

system:sun.io.unicode.encoding=UnicodeLittle

system:sun.java.launcher=SUN_STANDARD

system:sun.jnu.encoding=UTF-8

system:sun.management.compiler=HotSpot 64-Bit Tiered Compilers

system:sun.os.patch.level=unknown

system:user.country=US

system:user.dir=/run/cloudera-scm-agent/process/385-hive-HIVESERVER2

system:user.home=/var/lib/hive

system:user.language=en

system:user.name=hive

system:user.timezone=Asia/Singapore

682 rows selected (0.166 seconds)

Closing: 0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/test

0: jdbc:hive2://cdh-vm.dbaglobe.com:10000/tes>

[donghua@cdh-vm scripts]$
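The `set` dump above mixes three kinds of entries. Unprefixed lines are Hive/Hadoop configuration properties. `env:` lines are environment variables, which appear to belong to the HiveServer2 process (see the `385-hive-HIVESERVER2` paths). `system:` lines are JVM system properties. Below is a minimal sketch, not part of Beeline, for splitting saved output into those groups. It assumes the output is plain `key=value` lines as shown here, with csv2 quoting not applied.

The following names are hypothetical helpers for illustration: `parse_set_output` and the `conf` bucket name. The `hivevar:` prefix is an assumption, since it does not appear in this output.

```python
from collections import defaultdict

# Prefixes used for non-config entries. env:/system: appear in the output
# above; hivevar: is an assumption and is simply ignored if absent.
KNOWN_PREFIXES = ("env", "system", "hivevar")


def parse_set_output(lines):
    """Group `key=value` lines from Beeline `set` output by namespace.

    Heuristics (assumptions, not Beeline behaviour):
    - split on the FIRST '=' only, because values such as
      HADOOP_CLIENT_OPTS or YARN_OPTS contain '=' themselves;
    - skip lines with no '=' or whose key contains whitespace
      (prompts, JVM warnings, "682 rows selected ...", etc.);
    - values are kept verbatim; csv2 quoting is not undone.
    """
    groups = defaultdict(dict)
    for raw in lines:
        line = raw.rstrip("\n")
        key, sep, value = line.partition("=")
        if not sep or not key or any(ch.isspace() for ch in key):
            continue  # not a property line
        prefix, colon, name = key.partition(":")
        if colon and prefix in KNOWN_PREFIXES:
            groups[prefix][name] = value
        else:
            groups["conf"][key] = value  # hypothetical bucket for unprefixed keys
    return dict(groups)


if __name__ == "__main__":
    sample = [
        "Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0",
        "datanucleus.cache.level2=false",
        "env:HIVE_ROOT_LOGGER=ERROR,RFA",
        "env:YARN_OPTS=-Xmx825955249 -Djava.net.preferIPv4Stack=true",
        "system:user.timezone=Asia/Singapore",
        "682 rows selected (0.166 seconds)",
    ]
    cfg = parse_set_output(sample)
    print(cfg["env"]["YARN_OPTS"])
    print(cfg["system"]["user.timezone"])
```

To use it on the full dump, redirect the Beeline command's output to a file of your choosing (for example a hypothetical `hive_set.txt`) and pass the open file object to `parse_set_output`. Splitting on the first `=` only matters here because several values, such as `HADOOP_CLIENT_OPTS`, contain `=` characters of their own.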
