[donghua@localhost ~]$ spark-submit --master yarn-master speedByDay.py /user/donghua/IOTDataDemo.csv

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.13.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/12/02 03:44:04 INFO spark.SparkContext: Running Spark version 1.6.0

17/12/02 03:44:05 WARN util.Utils: Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 192.168.56.101 instead (on interface enp0s8)

17/12/02 03:44:05 WARN util.Utils: Set SPARK_LOCAL_IP if you need to bind to another address

17/12/02 03:44:05 INFO spark.SecurityManager: Changing view acls to: donghua

17/12/02 03:44:05 INFO spark.SecurityManager: Changing modify acls to: donghua

17/12/02 03:44:05 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(donghua); users with modify permissions: Set(donghua)

17/12/02 03:44:05 INFO util.Utils: Successfully started service 'sparkDriver' on port 40005.

17/12/02 03:44:06 INFO slf4j.Slf4jLogger: Slf4jLogger started

17/12/02 03:44:06 INFO Remoting: Starting remoting

17/12/02 03:44:06 INFO util.Utils: Successfully started service 'sparkDriverActorSystem' on port 40551.

17/12/02 03:44:06 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@192.168.56.101:40551]

17/12/02 03:44:06 INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkDriverActorSystem@192.168.56.101:40551]

17/12/02 03:44:06 INFO spark.SparkEnv: Registering MapOutputTracker

17/12/02 03:44:06 INFO spark.SparkEnv: Registering BlockManagerMaster

17/12/02 03:44:06 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-7bc45b99-8168-43af-bd94-e84fad6e1a96

17/12/02 03:44:06 INFO storage.MemoryStore: MemoryStore started with capacity 534.5 MB

17/12/02 03:44:06 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/12/02 03:44:07 INFO server.Server: jetty-8.y.z-SNAPSHOT

17/12/02 03:44:07 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:4040

17/12/02 03:44:07 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

17/12/02 03:44:07 INFO ui.SparkUI: Started SparkUI at http://192.168.56.101:4040

17/12/02 03:44:07 INFO client.RMProxy: Connecting to ResourceManager at cdh-vm.dbaglobe.com/192.168.56.10:8032

17/12/02 03:44:08 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

17/12/02 03:44:08 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (1536 MB per container)

17/12/02 03:44:08 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

17/12/02 03:44:08 INFO yarn.Client: Setting up container launch context for our AM

17/12/02 03:44:08 INFO yarn.Client: Setting up the launch environment for our AM container

17/12/02 03:44:08 INFO yarn.Client: Preparing resources for our AM container

17/12/02 03:44:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/12/02 03:44:10 INFO yarn.YarnSparkHadoopUtil: getting token for namenode: hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0021

17/12/02 03:44:10 INFO hdfs.DFSClient: Created token for donghua: HDFS_DELEGATION_TOKEN owner=donghua@DBAGLOBE.COM, renewer=yarn, realUser=, issueDate=1512204250136, maxDate=1512809050136, sequenceNumber=87, masterKeyId=6 on 192.168.56.10:8020

17/12/02 03:44:12 INFO hive.metastore: Trying to connect to metastore with URI thrift://cdh-vm.dbaglobe.com:9083

17/12/02 03:44:12 INFO hive.metastore: Opened a connection to metastore, current connections: 1

17/12/02 03:44:12 INFO hive.metastore: Connected to metastore.

17/12/02 03:44:12 INFO hive.metastore: Closed a connection to metastore, current connections: 0

17/12/02 03:44:12 INFO yarn.YarnSparkHadoopUtil: HBase class not found java.lang.ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration

17/12/02 03:44:12 INFO yarn.Client: Uploading resource file:/usr/lib/spark/lib/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0021/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar

17/12/02 03:44:14 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/pyspark.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0021/pyspark.zip

17/12/02 03:44:14 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/py4j-0.9-src.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0021/py4j-0.9-src.zip

17/12/02 03:44:14 INFO yarn.Client: Uploading resource file:/tmp/spark-12699e09-3871-4a0b-9a7f-af3bcd3de716/__spark_conf__7756289733249281889.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0021/__spark_conf__7756289733249281889.zip

17/12/02 03:44:14 INFO spark.SecurityManager: Changing view acls to: donghua

17/12/02 03:44:14 INFO spark.SecurityManager: Changing modify acls to: donghua

17/12/02 03:44:14 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(donghua); users with modify permissions: Set(donghua)

17/12/02 03:44:14 INFO yarn.Client: Submitting application 21 to ResourceManager

17/12/02 03:44:14 INFO impl.YarnClientImpl: Submitted application application_1512169991692_0021

17/12/02 03:44:15 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:44:15 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.donghua

start time: 1512204254781

final status: UNDEFINED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0021/

user: donghua

17/12/02 03:44:16 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:44:17 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:44:18 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:44:19 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:44:20 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

<omitted repeating message….>

17/12/02 03:47:43 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:47:44 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)

17/12/02 03:47:45 INFO yarn.Client: Application report for application_1512169991692_0021 (state: FAILED)

17/12/02 03:47:45 INFO yarn.Client:

client token: N/A

diagnostics: Application application_1512169991692_0021 failed 2 times due to AM Container for appattempt_1512169991692_0021_000002 exited with exitCode: 10

For more detailed output, check application tracking page:http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0021/Then, click on links to logs of each attempt.

Diagnostics: Exception from container-launch.

Container id: container_1512169991692_0021_02_000001

Exit code: 10

Stack trace: ExitCodeException exitCode=10:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:604)

at org.apache.hadoop.util.Shell.run(Shell.java:507)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:789)

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.launchContainer(LinuxContainerExecutor.java:373)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Shell output: main : command provided 1

main : run as user is donghua

main : requested yarn user is donghua

Writing to tmp file /yarn/nm/nmPrivate/application_1512169991692_0021/container_1512169991692_0021_02_000001/container_1512169991692_0021_02_000001.pid.tmp

Container exited with a non-zero exit code 10

Failing this attempt. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.donghua

start time: 1512204254781

final status: FAILED

tracking URL: http://cdh-vm.dbaglobe.com:8088/cluster/app/application_1512169991692_0021

user: donghua

17/12/02 03:47:45 ERROR spark.SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:124)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:64)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:151)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:538)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:59)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:234)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:381)

at py4j.Gateway.invoke(Gateway.java:214)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:79)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:68)

at py4j.GatewayConnection.run(GatewayConnection.java:209)

at java.lang.Thread.run(Thread.java:748)

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/kill,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/api,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/json,null}

17/12/02 03:47:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs,null}

17/12/02 03:47:45 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.56.101:4040

17/12/02 03:47:45 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

17/12/02 03:47:45 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors

17/12/02 03:47:45 INFO cluster.YarnClientSchedulerBackend: Asking each executor to shut down

17/12/02 03:47:45 INFO cluster.YarnClientSchedulerBackend: Stopped

17/12/02 03:47:45 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/12/02 03:47:45 INFO storage.MemoryStore: MemoryStore cleared

17/12/02 03:47:45 INFO storage.BlockManager: BlockManager stopped

17/12/02 03:47:45 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

17/12/02 03:47:45 WARN metrics.MetricsSystem: Stopping a MetricsSystem that is not running

17/12/02 03:47:45 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/12/02 03:47:45 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

17/12/02 03:47:45 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

17/12/02 03:47:45 INFO spark.SparkContext: Successfully stopped SparkContext

Traceback (most recent call last):

File "/home/donghua/speedByDay.py", line 11, in <module>

sc = SparkContext()

File "/usr/lib/spark/python/lib/pyspark.zip/pyspark/context.py", line 115, in __init__

File "/usr/lib/spark/python/lib/pyspark.zip/pyspark/context.py", line 172, in _do_init

File "/usr/lib/spark/python/lib/pyspark.zip/pyspark/context.py", line 235, in _initialize_context

File "/usr/lib/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 1064, in __call__

File "/usr/lib/spark/python/lib/py4j-0.9-src.zip/py4j/protocol.py", line 308, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

: org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:124)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:64)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:151)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:538)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:59)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:234)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:381)

at py4j.Gateway.invoke(Gateway.java:214)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:79)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:68)

at py4j.GatewayConnection.run(GatewayConnection.java:209)

at java.lang.Thread.run(Thread.java:748)

17/12/02 03:47:45 INFO util.ShutdownHookManager: Shutdown hook called

17/12/02 03:47:45 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-12699e09-3871-4a0b-9a7f-af3bcd3de716
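
The client output above is mostly repeated polling lines, so the interesting part is when the reported state changes. Below is a minimal sketch that condenses such output to its state transitions. It assumes the line layout shown above; the function name `state_transitions` is made up for illustration and is not part of Spark or YARN.

```python
import re

# Matches the yarn.Client polling lines, e.g.
# "17/12/02 03:44:15 INFO yarn.Client: Application report for application_..._0021 (state: ACCEPTED)"
REPORT_RE = re.compile(
    r"^\d{2}/\d{2}/\d{2} (?P<time>\d{2}:\d{2}:\d{2}) INFO yarn\.Client: "
    r"Application report for (?P<app>application_\d+_\d+) \(state: (?P<state>[A-Z_]+)\)"
)


def state_transitions(lines):
    """Return (time, application id, state) for each change of reported state.

    Assumes the lines come from a single spark-submit run (one application).
    """
    transitions = []
    last_state = None
    for line in lines:
        match = REPORT_RE.match(line.strip())
        if match is None:
            continue  # not a polling line
        state = match.group("state")
        if state != last_state:
            transitions.append((match.group("time"), match.group("app"), state))
            last_state = state
    return transitions


sample = [
    "17/12/02 03:44:15 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)",
    "17/12/02 03:44:16 INFO yarn.Client: Application report for application_1512169991692_0021 (state: ACCEPTED)",
    "17/12/02 03:47:45 INFO yarn.Client: Application report for application_1512169991692_0021 (state: FAILED)",
]
for time, app, state in state_transitions(sample):
    print(time, app, state)
```

For the failed run this leaves two lines: ACCEPTED at 03:44:15 and FAILED at 03:47:45. The application sat in ACCEPTED for about three and a half minutes before YARN gave up.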

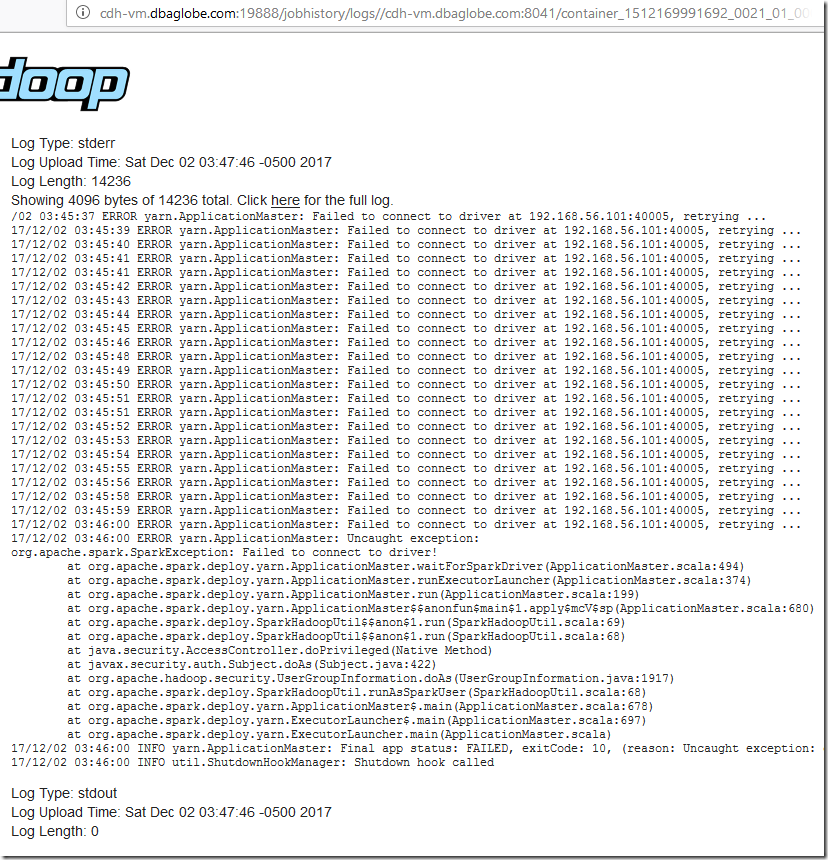

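The tracking URL confirms the cause: a firewall is blocking the port. In client mode the Spark driver runs on the submitting machine, so the ApplicationMaster inside the cluster must be able to connect back to it.

A clear illustrated explanation is available here: https://www.ibm.com/support/knowledgecenter/en/SSCTFE_1.1.0/com.ibm.azk.v1r1.azka100/topics/azkic_t_confignetwork.htm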
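
One quick way to check for this kind of blockage is to attempt a plain TCP connection from a cluster node to the driver's address. The sketch below does only that; the helper name `can_connect` is hypothetical, and the host and port in the comment are taken from the failed run above (the driver port was 40005 in that run and may differ on the next one).

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except socket.error:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
    sock.close()
    return True


# Example, to be run on a cluster node while the driver is still up
# (values from the log above):
#   can_connect("192.168.56.101", 40005)
```

A False result while the driver is running suggests a firewall or routing problem between the cluster and the submitting machine, though it does not say which device is dropping the traffic.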

Solution 1: submit the Spark job from a Hadoop edge node.

Solution 2: use cluster deploy mode (--deploy-mode cluster) instead of client mode. This launches the Spark driver on one of the worker machines inside the cluster:

[donghua@localhost ~]$ spark-submit --master yarn-master --deploy-mode cluster speedByDay.py /user/donghua/IOTDataDemo.csv

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.13.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/12/02 08:13:17 INFO client.RMProxy: Connecting to ResourceManager at cdh-vm.dbaglobe.com/192.168.56.10:8032

17/12/02 08:13:18 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

17/12/02 08:13:18 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (1536 MB per container)

17/12/02 08:13:18 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

17/12/02 08:13:18 INFO yarn.Client: Setting up container launch context for our AM

17/12/02 08:13:18 INFO yarn.Client: Setting up the launch environment for our AM container

17/12/02 08:13:18 INFO yarn.Client: Preparing resources for our AM container

17/12/02 08:13:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/12/02 08:13:19 INFO yarn.YarnSparkHadoopUtil: getting token for namenode: hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025

17/12/02 08:13:19 INFO hdfs.DFSClient: Created token for donghua: HDFS_DELEGATION_TOKEN owner=donghua@DBAGLOBE.COM, renewer=yarn, realUser=, issueDate=1512220399829, maxDate=1512825199829, sequenceNumber=93, masterKeyId=6 on 192.168.56.10:8020

17/12/02 08:13:22 INFO hive.metastore: Trying to connect to metastore with URI thrift://cdh-vm.dbaglobe.com:9083

17/12/02 08:13:22 INFO hive.metastore: Opened a connection to metastore, current connections: 1

17/12/02 08:13:22 INFO hive.metastore: Connected to metastore.

17/12/02 08:13:22 INFO hive.metastore: Closed a connection to metastore, current connections: 0

17/12/02 08:13:22 INFO yarn.YarnSparkHadoopUtil: HBase class not found java.lang.ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration

17/12/02 08:13:22 INFO yarn.Client: Uploading resource file:/usr/lib/spark/lib/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025/spark-assembly-1.6.0-cdh5.13.0-hadoop2.6.0-cdh5.13.0.jar

17/12/02 08:13:24 INFO yarn.Client: Uploading resource file:/home/donghua/speedByDay.py -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025/speedByDay.py

17/12/02 08:13:24 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/pyspark.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025/pyspark.zip

17/12/02 08:13:24 INFO yarn.Client: Uploading resource file:/usr/lib/spark/python/lib/py4j-0.9-src.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025/py4j-0.9-src.zip

17/12/02 08:13:24 INFO yarn.Client: Uploading resource file:/tmp/spark-4ebbd7d4-e821-4fb7-b0cc-43e574c9f682/__spark_conf__1334237190391243174.zip -> hdfs://cdh-vm.dbaglobe.com:8020/user/donghua/.sparkStaging/application_1512169991692_0025/__spark_conf__1334237190391243174.zip

17/12/02 08:13:24 INFO spark.SecurityManager: Changing view acls to: donghua

17/12/02 08:13:24 INFO spark.SecurityManager: Changing modify acls to: donghua

17/12/02 08:13:24 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(donghua); users with modify permissions: Set(donghua)

17/12/02 08:13:24 INFO yarn.Client: Submitting application 25 to ResourceManager

17/12/02 08:13:24 INFO impl.YarnClientImpl: Submitted application application_1512169991692_0025

17/12/02 08:13:25 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:25 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.donghua

start time: 1512220404650

final status: UNDEFINED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0025/

user: donghua

17/12/02 08:13:26 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:27 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:28 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:29 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:30 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:31 INFO yarn.Client: Application report for application_1512169991692_0025 (state: ACCEPTED)

17/12/02 08:13:32 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:32 INFO yarn.Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: 192.168.56.10

ApplicationMaster RPC port: 0

queue: root.users.donghua

start time: 1512220404650

final status: UNDEFINED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0025/

user: donghua

17/12/02 08:13:33 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:34 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:35 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:36 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:37 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:38 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:39 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:40 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:41 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:42 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:43 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:44 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:45 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:46 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:47 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:48 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:49 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:50 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:51 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:53 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:54 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:55 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:56 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:57 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:58 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:13:59 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:00 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:01 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:02 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:03 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:04 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:05 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:06 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:07 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:08 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:09 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:10 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:11 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:12 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:13 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:14 INFO yarn.Client: Application report for application_1512169991692_0025 (state: RUNNING)

17/12/02 08:14:15 INFO yarn.Client: Application report for application_1512169991692_0025 (state: FINISHED)

17/12/02 08:14:15 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 192.168.56.10

ApplicationMaster RPC port: 0

queue: root.users.donghua

start time: 1512220404650

final status: SUCCEEDED

tracking URL: http://cdh-vm.dbaglobe.com:8088/proxy/application_1512169991692_0025/

user: donghua

17/12/02 08:14:15 INFO util.ShutdownHookManager: Shutdown hook called

17/12/02 08:14:15 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-4ebbd7d4-e821-4fb7-b0cc-43e574c9f682

[donghua@localhost ~]$ hdfs dfs -cat /user/donghua/speedByDay/part-*

(u'0', 80.42217204861151)

(u'1', 80.42420773058639)

(u'2', 80.516892013888)

(u'3', 80.42997673611161)

(u'4', 80.62740798611237)

(u'5', 80.49621712962933)

(u'6', 80.5453983217595)
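
Each output line is the text form of a Python tuple, so it can be read back with `ast.literal_eval`. The sketch below assumes the part files have been copied locally or piped in as text lines; `parse_result_lines` is a hypothetical helper. The keys are presumably day indexes and the values average speeds, judging by the script name, since the script itself is not shown here.

```python
import ast


def parse_result_lines(lines):
    """Parse lines such as "(u'0', 80.42)" into a list of (key, value) tuples."""
    pairs = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        key, value = ast.literal_eval(line)
        pairs.append((key, float(value)))
    return pairs


sample = ["(u'0', 80.42217204861151)", "(u'4', 80.62740798611237)"]
pairs = parse_result_lines(sample)

# Pick the key with the largest value.
print(max(pairs, key=lambda kv: kv[1]))
```

`ast.literal_eval` only evaluates literals, so it is a safer choice than `eval` for text that comes from a file.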
